MiXA: A Musical Annotation System

Abstract

We present a Web-based musical annotation system named MiXA (MusicXML Annotator). The system enables users to associate any element of musical content, such as a note, a lyric, or a title, with additional information such as chords, comments, and impressions. MiXA can also handle annotations created by multiple users, and these annotations are managed separately for each annotator. This allows applications that consume annotations to deal with varied comments created by different annotators. Since the content and form of annotation data depend on the application or service, the system allows application developers to define their own semantics for annotation data.

We also present several application systems, such as music retrieval and reproduction, based on annotations acquired through MiXA.

1 Introduction

Recently, the demand for advanced use of multimedia content has grown rapidly. To permit such advanced use, for example semantic-content-based retrieval and summarization, several studies have been performed on annotation that associates multimedia content with metadata. For example, research on the Semantic Web and Semantic Transcoding, which focuses on semantic annotation of Web content, deals with pinpoint retrieval of target content and with personalization/adaptation of content according to users' characteristics, which change dynamically.

MPEG-7 is one of the most active recent efforts in multimedia annotation. MPEG-7 defines a data format that describes various types of information related to audio-visual content (e.g. scene and object descriptions). The MPEG-7 format enables semantic retrieval and automated analysis such as object tracking in frames of multimedia content.

Similarly, musical content becomes more useful when it is accompanied by additional information. Such information enables advanced services such as retrieval, classification, and summarization, and it motivates the development of musical annotation systems. Musical annotation data that can be used in many applications should include two kinds of information: information on the musical piece itself (genre, year of the work, etc.), and information about detailed portions of the piece (a note, lyrics, etc.).

Since music is an art, interpretations of a musical piece depend strongly on people's subjectivity. We think that open, global annotation systems can cover the diversity of interpretations of a musical piece by collecting many users' subjective data through the Internet. Additionally, since the required information changes according to the type of service, the annotation system should allow the administrator to customize the content and format of annotation data according to service requirements.

In this research, we implemented a musical annotation system that satisfies these requirements and can be adapted to various applications. The following sections describe the MiXA architecture and functionality, and some application systems that use annotation data collected through MiXA.

2 MiXA: A Musical Annotation System

2.1 XML-based system

We adopt MusicXML as the format for describing music, since it describes enough information to render a score or play the music. Annotations are also described in XML. Hereafter, such a document is called ``Annotation XML.''

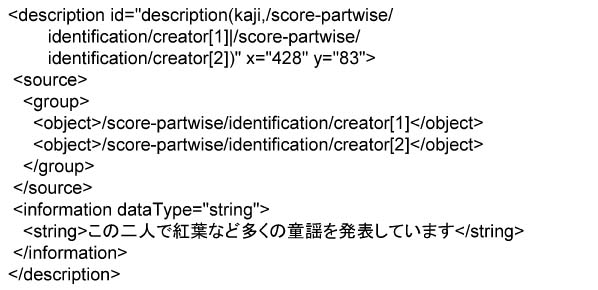

Figure 1: Example of Annotation XML (a part)

Fig. 1 illustrates an annotation of a musical description. The object associated with the annotation is described as an XPath (XML Path Language) expression in the ``source'' element, while the content of the annotation is in the ``information'' element. In the figure, ``String'' is given as the ``dataType'' attribute, which means that the annotation is written as a string. MiXA provides a number of data types, such as ``numeric'' and ``chord''. Further details of the data types are described in the following section.
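As a rough illustration of this layout, the following Python sketch builds one annotation and resolves its XPath against a score. Only the ``source'' and ``information'' element names and the ``dataType'' attribute come from the description above; the wrapper element name `annotation`, the helper names, and the heavily simplified MusicXML-like fragment are assumptions made for this example, not MiXA's actual schema.

```python
import xml.etree.ElementTree as ET


def build_annotation(xpath: str, data_type: str, value: str) -> ET.Element:
    """Build one annotation: a `source` XPath plus typed `information`."""
    annotation = ET.Element("annotation")  # hypothetical wrapper element name
    source = ET.SubElement(annotation, "source")
    source.text = xpath
    info = ET.SubElement(annotation, "information", {"dataType": data_type})
    info.text = value
    return annotation


# A tiny MusicXML-like fragment, simplified for illustration only.
SCORE = ET.fromstring(
    "<score-partwise><part id='P1'><measure number='1'>"
    "<note><pitch><step>C</step><octave>4</octave></pitch></note>"
    "<note><pitch><step>E</step><octave>4</octave></pitch></note>"
    "</measure></part></score-partwise>"
)


def resolve(score: ET.Element, annotation: ET.Element) -> list:
    """Return the score elements that the annotation's XPath points to."""
    return score.findall(annotation.findtext("source"))


# Annotate the second note of the first measure with a free-text comment.
ann = build_annotation("./part/measure[1]/note[2]", "String", "bright opening")
target = resolve(SCORE, ann)
print(ET.tostring(ann, encoding="unicode"))
print(target[0].findtext("pitch/step"))
```

The sketch uses the XPath subset supported by Python's standard library; a full XPath engine would be needed for more general expressions.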

We developed MiXA (MusicXML Annotator, pronounced ``mixer'') as a musical annotation system. The system can assist in the construction of various musical application systems (e.g. retrieval, summarization, supplementing, and citation) because it can efficiently supply the information they require. The functions and implementation details of this system are described in the following sections.

2.2 Flexible definition of annotation according to services

In many conventional systems, the content and form of annotations are fixed. Consequently, few application systems that employ such annotations have been realized. To solve this problem, we implemented a scheme that allows the form of annotation to be defined flexibly according to the service.

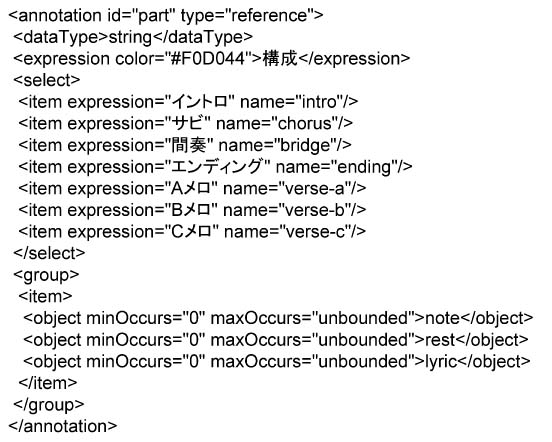

Figure 2: Example of Annotation Definition XML (a part)

As preparation, the definition of an annotation (its form and the objects with which it may be associated) must be described in an XML document. The system then analyzes the document and collects annotations of the form it describes. Henceforth, this document is called ``Annotation Definition XML.''

Fig. 2 represents the definition of musical structure. The data type of an annotation is chosen from four varieties: the string type, the numeric type, the boolean type, and the chord type. The chord type describes harmony. When the annotator is to choose the content of an annotation from fixed options, the options are enumerated in the ``select'' element.

The objects with which an annotation may be associated are described in the ``group'' element. In Fig. 2, an annotation can be associated with an object set that contains two or more notes, rests, and lyrics simultaneously. It is also possible to allow one annotation to be associated with another. Some information, such as a chord progression, cannot be described in one simple annotation. In such cases, the complicated information can be described as a set of simple annotations.
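To make the role of the definition concrete, here is a minimal Python sketch that loads a definition document, lists the annotation types applicable to a selection, and validates a value. The ``select'' and ``group'' element names and the four data types come from the text above; every other element and attribute name (`definitions`, `definition`, `option`, `object`, `name`), the lowercase type spellings, and the helper functions are hypothetical, since the actual schema is shown only in Figure 2.

```python
import xml.etree.ElementTree as ET

# Hypothetical definition document; only `select` and `group` are named in the text.
DEFINITION_XML = """
<definitions>
  <definition name="Structure" dataType="string">
    <select>
      <option>intro</option>
      <option>chorus</option>
      <option>bridge</option>
      <option>ending</option>
    </select>
    <group>
      <object>note</object>
      <object>rest</object>
      <object>lyric</object>
    </group>
  </definition>
  <definition name="Chord" dataType="chord">
    <group>
      <object>note</object>
    </group>
  </definition>
</definitions>
"""

DATA_TYPES = {"string", "numeric", "boolean", "chord"}


def load_definitions(xml_text):
    """Parse the definition document into a dict keyed by annotation type."""
    defs = {}
    for d in ET.fromstring(xml_text).findall("definition"):
        data_type = d.get("dataType")
        if data_type not in DATA_TYPES:
            raise ValueError(f"unknown data type: {data_type}")
        defs[d.get("name")] = {
            "dataType": data_type,
            "choices": [o.text for o in d.findall("select/option")],
            "objects": {o.text for o in d.findall("group/object")},
        }
    return defs


def menu_for(defs, selected_kinds):
    """Annotation types whose group allows every selected object kind."""
    wanted = set(selected_kinds)
    return sorted(name for name, d in defs.items() if wanted <= d["objects"])


def is_valid(defs, name, value):
    """Check a value against the enumerated choices or the data type."""
    d = defs[name]
    if d["choices"]:
        return value in d["choices"]
    if d["dataType"] == "numeric":
        try:
            float(value)
        except ValueError:
            return False
        return True
    if d["dataType"] == "boolean":
        return value in ("true", "false")
    return True  # string and chord values are not checked in this sketch


defs = load_definitions(DEFINITION_XML)
print(menu_for(defs, ["note", "lyric"]))
print(is_valid(defs, "Structure", "chorus"))
```

Keeping the allowed objects and choices in data rather than in code is what lets a service change its annotation vocabulary without changing the annotation tool.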

2.3 Annotation through Web browsers

Subjective information (affection, impression, etc.) is essential for recognizing music semantically. To collect such annotations from a broad spectrum of people, we designed the system as a Web service.

When targeting a large number of people, it is necessary to take the reliability of annotations into consideration. For this reason, we require users to register with the system, and an XML profile is created for each user beforehand. After registering, each user can log in to the system through a basic authentication step. Each annotation includes information on the person who created it, and the system includes a function to identify the annotator, which helps hold down the number of irresponsible annotations.

2.4 Music Expression Format

There is research that associates affection with a musical piece as a whole, though the impression changes with the part of the piece the listener attends to. There is still a lack of data that would enable a detailed retrieval system for music affection. To solve this problem, annotations should be associated with detailed objects of music. Consequently, we adopt the score as the representation of music, because users can easily select partial objects of music from a score. Moreover, a user can understand the selected object by playing the chosen part of the musical piece.

Figure 3: Example of score generated by MiXA

Figure 4: Annotation to selected objects

The annotations related to the musical objects are also displayed in the score. Hereafter, these objects are called ``Annotation Objects.''

Annotations are created by the following procedure. First, the user selects the objects with which the annotation will be associated. Next, as in Fig. 4, the user selects the annotation type from the menu, after which he or she can enter the concrete information. The annotation menu is generated dynamically according to the selected objects and the Annotation Definition XML.

If the annotation's data type is free-form, such as the string or chord type, the information is entered via a sub-window. If the annotation is selective, the information can instead be selected directly from the annotation menu.

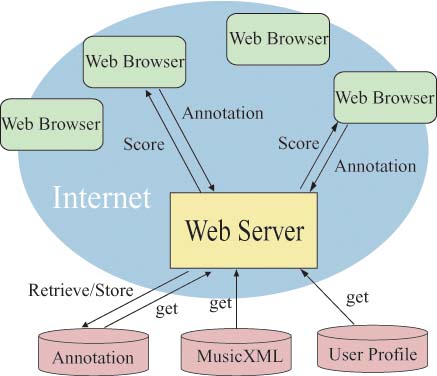

Figure 5: System architecture of MiXA

2.5 System architecture

Fig. 5 shows an outline of MiXA's architecture.

The user logs into the system through basic authentication with a Web browser and selects one piece of music from the music list or from a retrieval result. The Web server takes the MusicXML of the requested music from the XML database and transforms it into a score described in SVG (Scalable Vector Graphics). Each object in the score has an XPath that points to the corresponding MusicXML element. Annotation objects associated with the music are also displayed in the score.

To save an annotation, the content of the annotation, the coordinates in the score, and related data are transmitted to the Web server. One Annotation XML is generated and stored per user and per piece of content.

3 Application Systems

In this paper, we consider music retrieval and reconstruction as application systems based on annotations. To realize these applications, we have defined five types of annotation: ``Impression'', ``Affection'', ``Description'', ``Chord'', and ``Structure''. These types are described in Annotation Definition XML. Here, ``Structure'' means the rough musical structure, such as the intro, the chorus, the bridge, and the ending.

3.1 Music retrieval system

This retrieval system can retrieve music by chord progression or by keywords found in annotations, in addition to the title, lyrics, and composer information described in MusicXML. Furthermore, the user can narrow down the search by using the music's rough structure. The system lists the results in descending order of the calculated retrieval rank, and from this list the user can move on to the annotation phase or to the reconstruction system (described later).

3.1.1 Retrieval by keyword

With retrieval by keyword, the user can input title, composer, lyricist, impression, and description via a Web browser. The server receives the retrieval request and conducts its search as follows.

For title, lyricist, and composer, it searches the database in which MusicXML is stored and obtains the corresponding musical piece. For other information, the system finds the musical piece in two steps. First, it searches the database in which annotations are stored to obtain the corresponding annotation(s). Second, it obtains the musical piece associated with each annotation.

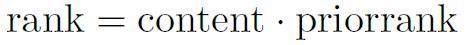

The retrieval rank ``rank'' is the number of appearances n of thekeyword for the same music.
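The keyword ranking described above can be sketched in a few lines of Python. The in-memory list stands in for the annotation database, and the record layout, sample data, and whitespace tokenization are assumptions made for this illustration; only the rule that the rank equals the number of keyword appearances per piece comes from the text.

```python
from collections import Counter

# Hypothetical annotation records: (music_id, annotation_type, text).
ANNOTATIONS = [
    ("song-1", "Impression", "sad and quiet"),
    ("song-1", "Description", "a sad farewell"),
    ("song-2", "Impression", "cheerful"),
    ("song-3", "Impression", "sad"),
]


def rank_by_keyword(annotations, keyword):
    """Rank pieces by how often the keyword appears in their annotations."""
    counts = Counter()
    for music_id, _annotation_type, text in annotations:
        n = text.lower().split().count(keyword.lower())
        if n:
            counts[music_id] += n
    # Highest rank first; ties are broken by music id for a stable order.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


results = rank_by_keyword(ANNOTATIONS, "sad")
print(results)
```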

3.1.2 Retrieval by Chord progression

To retrieve by chord progression, the user presses the ``chord progression'' button on the retrieval form. The system then produces a list of music as follows. First, the requested chord progression is converted to relative values. For example, ``C G/B Dm C'' becomes the relative chord progression ``0,-5/-1,2m,0.'' Second, for each piece of music, the chord annotations are acquired and a chord progression is built from them. The distance between the requested relative chord progression and the progression built from the annotations is then measured by DP matching; the closer the match, the smaller the distance. The rank is a normalized value that is the inverse of the distance.
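The two steps can be sketched as follows in Python. Several details are assumptions rather than the paper's method: intervals are taken relative to the root of the first chord and folded into the range -5..6 (which reproduces the ``0,-5/-1,2m,0'' example), DP matching is implemented as a plain edit distance over whole sequences, and the normalization 1/(1 + distance) is one possible reading of "a normalized value that is the inverse of the distance".

```python
import re

PITCH_CLASS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
# Root, quality suffix, and optional bass note, e.g. "G/B" or "Dm".
CHORD_RE = re.compile(r"^([A-G][#b]?)([^/]*)(?:/([A-G][#b]?))?$")


def pitch_class(name):
    """Map a note name such as 'C#' or 'Bb' to a pitch class 0..11."""
    offset = {"": 0, "#": 1, "b": -1}[name[1:]]
    return (PITCH_CLASS[name[0]] + offset) % 12


def fold(semitones):
    """Fold an interval into -5..6, so that G relative to C becomes -5."""
    return (semitones + 5) % 12 - 5


def to_relative(chords):
    """Convert chord symbols to values relative to the first chord's root."""
    parsed = []
    for chord in chords:
        match = CHORD_RE.match(chord)
        if match is None:
            raise ValueError(f"cannot parse chord: {chord}")
        parsed.append(match.groups())
    tonic = pitch_class(parsed[0][0])
    relative = []
    for root, quality, bass in parsed:
        token = f"{fold(pitch_class(root) - tonic)}{quality}"
        if bass:
            token += f"/{fold(pitch_class(bass) - tonic)}"
        relative.append(token)
    return relative


def dp_distance(a, b):
    """Edit distance between two token sequences by dynamic programming."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (x != y)))
        prev = cur
    return prev[-1]


def rank(query, candidate):
    """Assumed normalization: 1.0 for an exact match, smaller otherwise."""
    return 1.0 / (1.0 + dp_distance(to_relative(query), to_relative(candidate)))


query = "C G/B Dm C".split()
print(",".join(to_relative(query)))
print(rank(query, "D A/C# Em D".split()))
```

Because the comparison is made on relative values, a transposed copy of the query matches it exactly. Matching a short query inside a long piece would need a subsequence variant of the distance, which this sketch does not attempt.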

Our retrieval algorithm for chord progression is based on the algorithmintroduced in .

3.1.3 Filtering of search results based on musical structure

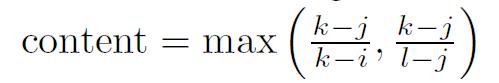

Figure 6: Formula of content calculation

In this retrieval system, it is possible to filter by musical structure, as in ``music with a sad chorus.''

The server receives filtering requests together with keyword and chord progression requests. If the system receives ``music with a sad chorus,'' then ``chorus'' is the musical structure used for filtering, and the remaining retrieval request is the ``sad'' affection.

Figure 7: Formula of rank calculation

First, the above-mentioned retrieval is performed for the keywords or chord progressions, and the rank of the result is called ``priorrank''. Then, for each keyword or chord progression, ``content'' is calculated based on how much of it is contained in the structure named in the filtering request.

The music objects with which the ``chorus'' annotation is associated span a range of the piece and have a certain count. Likewise, the music objects with which the ``sad'' annotation is associated span a range and have their own count. The objects with which both annotations are associated span the common range and have a third count. The ``content'' is then calculated from these counts by the formula in Figure 6.

The ``content'' becomes large when many of the objects associated with the chorus are also associated with ``sad,'' or when many of the objects associated with ``sad'' lie within the chorus. The rank of the final filtering is derived by the formula in Figure 7.

Details of these systems are given as follows.

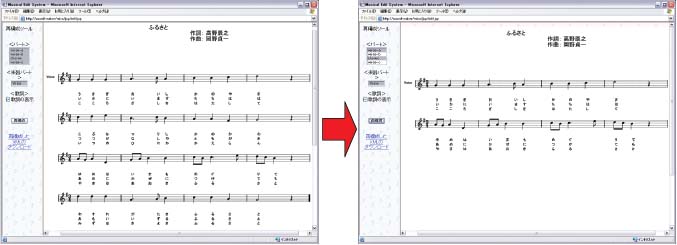

3.2 Music reproduction system

We have also implemented a musical reproduction system. Using the lyrics, the instrumental parts, and the rough musical structure (such as the introduction, the chorus, the bridge, and the ending), the user can reconstruct the original music into an arrangement of his or her choice. For example, if the original music is too long, it can be shortened to an intro-chorus-ending form.

Figure 8: Original music (left), after reproduction (right)

First, the user selects one piece of music from the entire music list or from a retrieval result. The left-hand side of Fig. 8 shows the original music. The user can choose the musical structure, lyrics, and instrumental part from the left frame of the browser. After the user requests reproduction, the server creates a MusicXML document constructed from the user's request and converts it to SVG (right-hand side of Fig. 8). In addition, the user can download the reconstructed MusicXML.
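The structure-based part of this reconstruction can be sketched in Python as follows. The measure ranges, the `STRUCTURE` mapping, and the helper names are hypothetical; in MiXA the ranges would come from ``Structure'' annotations, and a real MusicXML part carries far more content than the empty measures used here. Selection of lyrics and instrumental parts is not covered.

```python
import copy
import xml.etree.ElementTree as ET

# Hypothetical structure annotations: label -> (first, last) measure, inclusive.
STRUCTURE = {
    "intro": (1, 4),
    "chorus": (5, 12),
    "bridge": (13, 16),
    "ending": (17, 20),
}


def make_part(n_measures):
    """Create a stand-in MusicXML part containing empty numbered measures."""
    part = ET.Element("part", {"id": "P1"})
    for number in range(1, n_measures + 1):
        ET.SubElement(part, "measure", {"number": str(number)})
    return part


def rebuild(part, structure, wanted):
    """Return a new part that keeps only the requested sections, in order."""
    by_number = {int(m.get("number")): m for m in part.findall("measure")}
    new_part = ET.Element("part", dict(part.attrib))
    for label in wanted:
        first, last = structure[label]
        for number in range(first, last + 1):
            # Copy so that the original part is left untouched.
            new_part.append(copy.deepcopy(by_number[number]))
    return new_part


original = make_part(20)
shortened = rebuild(original, STRUCTURE, ["intro", "chorus", "ending"])
kept = [int(m.get("number")) for m in shortened.findall("measure")]
print(kept)
```

A complete implementation would probably also renumber the measures and carry over attributes such as key and time signatures, which this sketch leaves out.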

4 Experimentation

We evaluated MiXA in a series of experiments conducted with the help of 30 human subjects. The evaluation was designed to determine whether the system can collect annotations efficiently over a network and whether annotations associated with the partial structure of a musical piece can be used for application services.

- (Q1) Could annotation be created smoothly?
- (Q2) Was it possible to intuitively select a partial object of a musical piece?
- (Q3) Do you want to use retrieval for the partial structure of a musical piece?
- (Q4) Ease of musical piece retrieval by R1.
- (Q5) Ease of musical piece retrieval by R2.

For these experiments, we implemented a retrieval system based on information associated with the musical piece itself, such as genre, year of the work, and length of the music. Hereafter, this system is called ``R1''. The retrieval system using annotations introduced in this paper is called ``R2''.

First, we had the 30 subjects read MiXA's manual page until they had mastered the basic annotation procedure. They then created annotations for nine traditional songs and answered questions 1 and 2. Following that, they searched for musical pieces with R1 and R2 and answered questions 3, 4, and 5. The questions are those listed above as Q1 to Q5.

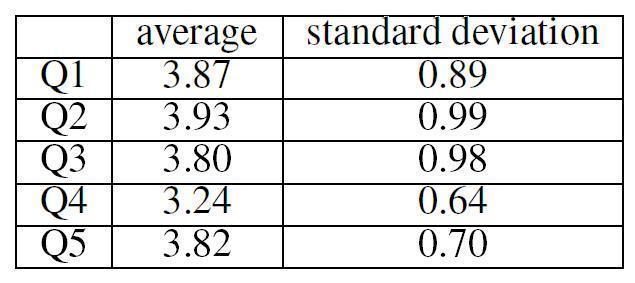

Figure 9: The result of experimentation

Fig. 9 shows a summary of the experimental results.

Here, Q1 and Q2 evaluate whether annotations could be collected accurately over a network. The average for Q1 was 3.87, with a standard deviation of 0.89. The average for Q2 was 3.93, with a standard deviation of 0.99. These results suggest that annotations can be collected effectively over a network using this system.

Questions 3, 4, and 5 evaluate whether annotations for a partial object of a musical piece can be used effectively. The average for Q3 was 3.80, with a standard deviation of 0.98. From this result, we consider that many people were motivated to retrieve by partial structures of a musical piece. Moreover, the average for Q4 was 3.24 and that for Q5 was 3.82, giving a difference between them of 0.58.

We performed a paired t-test to verify whether there was any significant difference between R1 and R2; the resulting t-value was 2.76. Consequently, at the 5% significance level, we conclude that R2 was more effective than R1. This means that retrieval using annotations associated with a partial object of a musical piece is superior to retrieval from information related to the musical piece itself, such as genre. R2 was especially effective when the description of the music desired for retrieval was vague, or when the user knew only some part of the music. Moreover, we received the comment that retrieval by chord progression will also be helpful for those who are studying a composer or musical theory.
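For reference, the standard paired t statistic is written out below. The symbols $d_i$, $\bar{d}$, $s_d$, and $n$ are notation introduced here and do not appear in the original text; $d_i$ denotes subject $i$'s Q5 score minus his or her Q4 score, so that $\bar{d} = 0.58$.

```latex
\[
t = \frac{\bar{d}}{s_d / \sqrt{n}}, \qquad
\bar{d} = \frac{1}{n} \sum_{i=1}^{n} d_i, \qquad
s_d = \sqrt{\frac{1}{n-1} \sum_{i=1}^{n} \left( d_i - \bar{d} \right)^2}
\]
```

Assuming that all $n = 30$ subjects answered both questions, the test has $n - 1 = 29$ degrees of freedom, for which the two-sided critical value at the 5% level is approximately 2.045. The reported value of 2.76 exceeds this threshold, which is consistent with the conclusion above.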

These results suggest that annotations collected by MiXA are effective in realizing an advanced service.

5 Related Research

5.1 Papipuun

Papipuun is a summarization system based on polyphony, which is itself based on time-span reduction in the generative theory of tonal music (GTTM) and the deductive object-oriented database (DOOD). GTTM is a typical theory for analyzing musical structure. By using Papipuun, it is possible to generate a high-quality music summary through interaction with a user.

To prepare a summary, TS-Editor helps the annotator create a polyphony based on time-span reduction. For example, TS-Editor displays the musical piece in a piano roll.

Ideally, according to the kind of annotation associated with a musicalobject, the user should be shown the optimal form.

6 Summary and Further Work

6.1 Use of automatically-generated annotation

Although it is necessary to employ information that can be obtained somewhat mechanically by automatic analysis, such as musical tone or chord progression, automatically analyzed information is not sufficiently accurate in many cases. In such cases, however, a user can edit and correct the analysis result to produce more accurate data.

Thus, the cost of annotation can be reduced considerably by adopting a system in which a user edits and corrects coarse information obtained by automatic analysis.

This paper described the novelty, significance, and method of the musical annotation system MiXA. MiXA can associate annotations with detailed objects of a musical piece by using the score. Another feature is that it can be used by many users asynchronously. Furthermore, it has a function by which the form of an annotation can be defined according to the application system. We also implemented retrieval and reproduction systems using annotations. Evaluation experiments were conducted, and the system's validity was confirmed.

Some future research items are listed below.

6.2 Another point of view for collecting annotation

Annotation to a score has the advantage that the associated object can be identified precisely; however, the labor of the annotator is likely to increase as a result.

Therefore, it is necessary to integrate other techniques to make it easier to create a history for a musical piece while listening to it.

6.3 Extension of application systems

We are considering developing an online musical education system based on annotation to deepen students' understanding of musical pieces while interacting with their teachers. The aim is to improve the quality of students' practice performance.