Weblog-style Video Annotation and Syndication

Abstract

In this paper, we present a method to extend the mechanisms employed for Weblogs in order to make video contents accessible to Weblog communities. We also present a mechanism that generates Weblog entries from the browsing history of the video contents to link Weblogs with video contents. By extracting knowledge on the video contents from communication among the Weblog communities and syndicating that knowledge in the form of RDF, we can use the mechanism for annotation-based applications, such as semantic video retrieval and summarization systems.

1 Introduction

We present a mechanism to distribute and advertise video contents effectively on the Internet and to form communities centered on those contents. Conventional video contents distribution systems address only the signal processing problem of delivering high-quality video streams stably, or the problem of protecting the contents holder's profit through methods such as encryption and accounting systems for multimedia contents. These systems do not consider advertising or the spread of contents. To address these shortcomings, we propose a video contents distribution system suited to Weblogs \cite{weblog}, which have become popular on the Internet in recent years. Weblog communities are formed through the mechanisms of Trackback \cite{Trackback} and RSS \cite{RSS}, and their influence is said to be enormous. A wide variety of Weblog articles introduce useful information and commodities to others using photographs and links to related pages, and such articles are recognized as an effective means of advertising commodities.

In addition, by applying the Weblog mechanism to video contents, this system can activate a community that centers on video contents, and by relating meta information such as Trackback or comments to video contents, we can extract knowledge as video annotations.

2 Weblogizing Online Video Contents

We propose a mechanism that activates video contents and their related communities by replacing a Weblog entry with video contents (and their scenes). Some Weblog sites that treat video contents already exist, and these sites are called ``Videoblogs.'' These Weblogs display video by means of a video plug-in embedded in the HTML page. This approach has several problems, however: video files are too large to download many at a time, and users cannot learn what a video contains without watching it in its entirety. Furthermore, the video contents cannot be treated scene by scene. To solve these problems, we propose a mechanism to distribute video contents efficiently on the Weblog network.

2.1 Definition of Weblogs

Weblogs feature a mechanism to efficiently distribute frequently updated articles, called ``Entries,'' on the Web. Although there are various opinions about an accurate definition of a Weblog, in this research we target Weblogs that comply with the following four requirements from a technical perspective.

- Permalink \cite{Aimeur03}: Each entry can be specified by a unique and permanent URI.
- Annotations: Users can add comments to each entry.
- Trackback \cite{Trackback}: When user A writes entry A referring to entry B, user A can generate the reverse-link from entry B to entry A explicitly. As a result, we can form an interactive link between the entries.
- RSS (RDF Site Summary) \cite{RSS}: The system can distribute updated Weblog information in RDF form.

By linking entries via these mechanisms, we can activate a community that centers on Weblog entries. These mechanisms have already been adopted and standardized in many of the major Weblog services, such as MovableType (http://www.movabletype.org/) and Blogger (http://www.blogger.com/), and they can also be used between different Weblog services.

2.2 Problems of Weblogizing Online Video Contents

To ensure that video contents can be included in a Weblog network, this system requires functions similar to those of existing Weblogs. In short, it is necessary to implement the mechanisms of Permalink, Trackback, Annotation, and RSS. We call the application of the Weblog mechanisms to the contents ``weblogizing,'' and we call the weblogized video contents ``Videoblogs.''

In contrast to a general Weblog entry, the meaning and the situation of video contents might be different in each scene. Therefore, we have to consider the following conditions to weblogize the video contents. First, it should be possible to apply Trackback and Annotation, etc. to not only the entire contents, but also to arbitrary scenes. Second, when the user quotes a particular scene in the video, it becomes necessary to quote video scenes in a form by which the content can be understood. Finally, compatibility with existing Weblogs must be high.

In order to weblogize video contents, our system must satisfy the four requirements and the three conditions listed above. If a unique ID is assigned to the contents, requirement 1 can be easily achieved. In this paper, we propose implementations of requirements 2, 3, and 4.

2.3 Implementation of Annotation for Video Contents

The first mechanism necessary for a Videoblog is one with which users can easily annotate the video contents. For this we employ iVAS (intelligent Video Annotation Server) \cite{Yamamoto04b}, which we developed in our previous research.

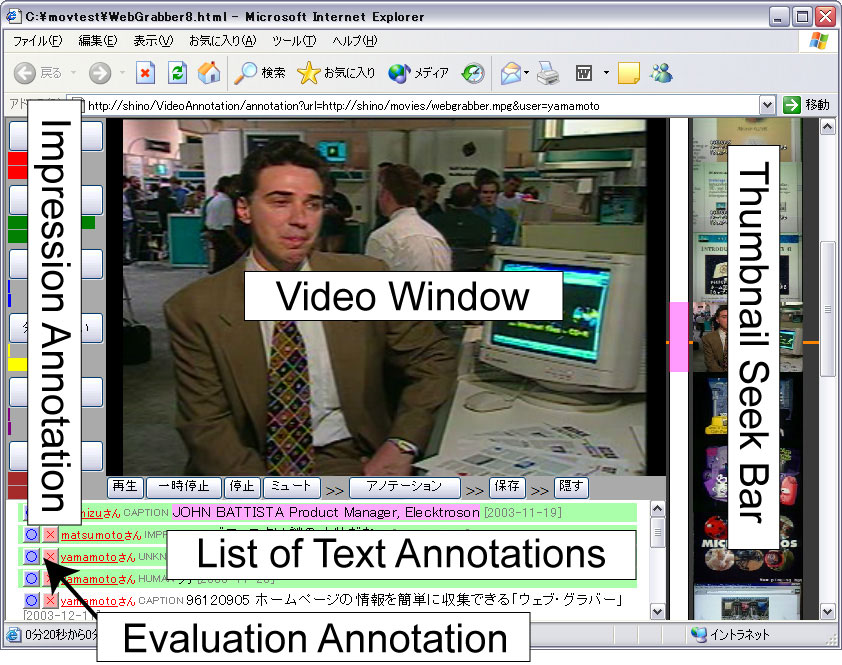

With iVAS, the user annotates online video contents while watching them. iVAS provides three types of annotation through an electronic bulletin-board interface (Figure \ref{fig1}): text annotation, with which users comment on arbitrary scenes in the video contents; impression annotation, with which users associate the video contents with their subjective impressions by clicking a mouse; and evaluation annotation, with which users evaluate each text annotation by clicking the O-X buttons.

We can communicate with other users about video contents by using these mechanisms, and we have developed a video retrieval system that employs the annotations of video contents.

Figure 1: Example of Videoblog Annotation

2.4 Implementation of Trackback

Trackback is the second mechanism we apply to Videoblogs. Trackback is a mechanism that automatically links the referring entry (the reference origin) and the referenced entry (the reference head). Therefore, unlike mutual links between Websites, it can link content at the level of individual entries. Furthermore, because a Trackback link is created through human judgment, it is a stronger link that reflects the article's content.

The form of Trackback is standardized \cite{Trackback}: it transmits the name of the site, the title of the entry, a summary of the entry, and the URI of the entry to the target entry's Trackback URI. This transmission is called a ``Trackback ping.'' A Trackback URI usually takes the following form, and the user specifies the target entry by entering this URI.

The structure of a Videoblog's Trackback is the same as that of a Weblog, and a link is established by sending a Trackback ping. To send a Trackback to an arbitrary scene of the video contents, we have to transmit the contents ID and the start time and end time of the scene. Because everything in the ping except the Trackback URI is strictly standardized, we embed this information in the Trackback URI by extending it into the following form.

According to this form, a Videoblog can accept a Trackback without changing the existing Weblog.
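The scene-level Trackback described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: the endpoint `http://videoblog.example/tb` and the query-parameter names `id`, `start`, and `end` are hypothetical stand-ins for the extended URI form, while the four ping fields (`blog_name`, `title`, `excerpt`, `url`) are those of the standard Trackback ping. The request is only built, not sent.

```python
from urllib.parse import parse_qs, urlencode, urlsplit
from urllib.request import Request


def scene_trackback_uri(base_uri, content_id, start_sec, end_sec):
    """Embed the scene (contents ID, start, end) in the Trackback URI.

    The query-parameter names are hypothetical, chosen for illustration.
    """
    query = urlencode({"id": content_id, "start": start_sec, "end": end_sec})
    return f"{base_uri}?{query}"


def trackback_ping(trackback_uri, blog_name, title, excerpt, url):
    """Build (but do not send) a standard Trackback ping as an HTTP POST."""
    body = urlencode(
        {"blog_name": blog_name, "title": title, "excerpt": excerpt, "url": url}
    ).encode("utf-8")
    headers = {"Content-Type": "application/x-www-form-urlencoded; charset=utf-8"}
    return Request(trackback_uri, data=body, headers=headers, method="POST")


def parse_scene(trackback_uri):
    """Server side: recover the target scene from the Trackback URI."""
    query = parse_qs(urlsplit(trackback_uri).query)
    return {
        "content_id": query["id"][0],
        "start": float(query["start"][0]),
        "end": float(query["end"][0]),
    }
```

Because the scene information travels only in the URI, the ping body stays identical to an ordinary Weblog ping, which is why an unmodified Weblog can send it.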

2.5 Video Annotation Syndication based on RDF

We apply the mechanism of RSS to the video contents as the third mechanism for Videoblogs. We syndicate not only update information on the contents, but also information such as annotations and Trackbacks, in the form of RDF. In concrete terms, by specifying the video contents' URI and the annotation ID in rdf:resource attributes, we can express them in the following form.

As a result, we can collect annotations distributed to two or more Videoblog servers, and we can use these annotations for various applications. Although general RSS syndicates only the latest updated information, in this system, by syndicating meta information on all contents, we can promote its use for video annotations. Consequently, we can introduce the concept of the Semantic Web into the multimedia contents.
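As an illustration of this kind of syndication, the sketch below serializes one scene annotation as an RSS 1.0 item. The RDF and RSS 1.0 namespaces are the standard ones. The `ann` namespace, its property names (`video`, `annotation`, `start`, `end`), and the example URIs are assumptions made for this example and are not the vocabulary used by the system.

```python
import xml.etree.ElementTree as ET

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
RSS = "http://purl.org/rss/1.0/"
ANN = "http://videoblog.example/ns#"  # hypothetical annotation vocabulary

ET.register_namespace("rdf", RDF)
ET.register_namespace("rss", RSS)
ET.register_namespace("ann", ANN)


def annotation_rdf(video_uri, annotation_id, start_sec, end_sec, text):
    """Serialize one scene annotation as an RSS 1.0 item inside rdf:RDF."""
    annotation_uri = f"{video_uri}#{annotation_id}"
    root = ET.Element(f"{{{RDF}}}RDF")
    item = ET.SubElement(root, f"{{{RSS}}}item", {f"{{{RDF}}}about": annotation_uri})
    ET.SubElement(item, f"{{{RSS}}}title").text = f"Annotation {annotation_id}"
    ET.SubElement(item, f"{{{RSS}}}link").text = annotation_uri
    ET.SubElement(item, f"{{{RSS}}}description").text = text
    # The video URI and the annotation ID are carried in rdf:resource attributes.
    ET.SubElement(item, f"{{{ANN}}}video", {f"{{{RDF}}}resource": video_uri})
    ET.SubElement(item, f"{{{ANN}}}annotation", {f"{{{RDF}}}resource": annotation_uri})
    ET.SubElement(item, f"{{{ANN}}}start").text = str(start_sec)
    ET.SubElement(item, f"{{{ANN}}}end").text = str(end_sec)
    return ET.tostring(root, encoding="unicode")
```

An aggregator that collects such items from several Videoblog servers can group them by the `rdf:resource` value of the video to gather all annotations on the same contents.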

3 Content Syndication based on Videoblog

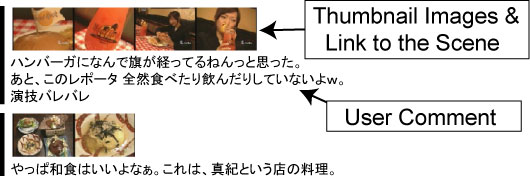

Here we propose a supporting system with which the user can easily quote arbitrary scenes of the video contents and then produce an article concerning those contents. As a result, the user can advertise contents in his or her own Weblog, and can activate the circulation of those contents. In addition, by associating Videoblogs with Weblogs, we can acquire comments in Weblog entries as text annotation of the video contents. In this research, the form of the quotation in the video contents is composed of a link to the scene and a comment on the scene, along with a thumbnail image. An example can be seen in Figure \ref{videoweblog}.

Figure 2: Quotation of Video Contents with Thumbnail Images
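A quotation of this form (a link to the scene, a thumbnail image, and a comment) could be rendered as an HTML fragment for a Weblog entry roughly as follows. The markup, the class name `video-quote`, and the URL layout are hypothetical; only the three components come from the description above.

```python
from html import escape


def quote_scene_html(scene_url, thumbnail_url, comment):
    """Render a scene quotation: thumbnail linked to the scene, plus a comment.

    All values are escaped so they are safe to embed in an entry body.
    """
    href = escape(scene_url, quote=True)
    src = escape(thumbnail_url, quote=True)
    return (
        '<blockquote class="video-quote">'
        f'<a href="{href}"><img src="{src}" alt="scene thumbnail"></a>'
        f"<p>{escape(comment)}</p>"
        "</blockquote>"
    )
```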

3.1 Semi-Automatic Generation of Video Article from Videoblog

We assume that users watch video contents by using iVAS. Because annotations are made in real time in iVAS while the user watches the video contents, we cannot necessarily acquire sufficient annotations. However, if the user likes the contents, he or she will have the desire to write an article about it sooner or later. If the user writes the article after viewing the contents, it is important that scenes have been marked and annotated, perhaps with an impression annotation. We consider iVAS the best tool for that.

The system then extracts the target scenes of the video article from the annotations the user produced in real time while using iVAS. The system recommends scenes likely to interest the user and offers a template on which to write a Weblog entry about the video contents. Moreover, the system automatically associates the user's Weblog entry with these scenes by linking them through Trackbacks. The user writes the entry for these scenes based on the recommendation result.

The mechanism for recommending target scenes of the video article is roughly as follows. First, we introduce an interest level that shows how much interest the user has in the scene. The more users give annotations to the scene, the more the interest level rises. Concretely, the interest level is high for scenes that have had text annotations or many impression annotations made about them. Furthermore, the system recommends and ranks scenes that generate high interest.
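The ranking step can be sketched as below. The text states only that the interest level rises with the number of annotations and is high for scenes with text annotations or many impression annotations; the linear scoring formula and the two weights here are assumptions made for illustration, not the system's actual definition.

```python
from dataclasses import dataclass

# Assumed weights: a text annotation is taken to signal more interest
# than a single impression click. These values are illustrative only.
TEXT_WEIGHT = 3.0
IMPRESSION_WEIGHT = 1.0


@dataclass(frozen=True)
class Scene:
    scene_id: str
    text_annotations: int        # number of text annotations on the scene
    impression_annotations: int  # number of impression annotations on the scene


def interest_level(scene):
    """Weighted count of annotations attached to the scene."""
    return (TEXT_WEIGHT * scene.text_annotations
            + IMPRESSION_WEIGHT * scene.impression_annotations)


def recommend(scenes, top_k=3):
    """Return the top_k scenes ordered by descending interest level."""
    return sorted(scenes, key=interest_level, reverse=True)[:top_k]
```

For example, with scenes `a` (0 text, 2 impression), `b` (1 text, 0 impression), and `c` (2 text, 5 impression), the interest levels under these weights are 2, 3, and 11, so `recommend(scenes, top_k=2)` returns `c` followed by `b`.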

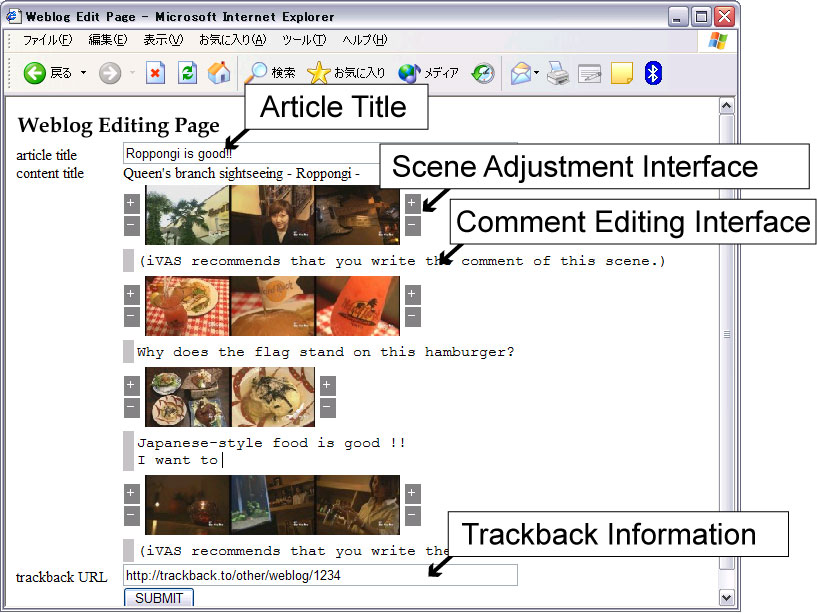

3.2 Updating Video Article and Annotation

Users can correct semi-automatically generated video articles just as they would a conventional Weblog entry. The corrections are reflected in iVAS as text annotations and are used to improve the accuracy of applications such as video retrieval systems. Two items can be corrected. The first is the range of the targeted annotation scene: the start and end points of the scene can be adjusted. The second is the text. Figure \ref{weblogwin} shows an example of the interface. The system records the corrected result in the annotation database as an annotation of the superscription attribute. As a result, we can acquire highly reliable annotations that have been corrected by hand.

Figure 3: Weblog Editing Page for Video Article

4 Application based on Video Annotation

By considering the information that this system obtains as video annotations, we can extract knowledge from video contents and employ it for various services.

To date there have been several studies focusing on video annotation. For instance, Informedia \cite{Wactlar96} makes annotations automatically using speech recognition and image recognition technology. Because the analytical techniques and their accuracy differ depending on the type of contents, it is difficult to apply this mechanism to general contents. By manually supplementing and correcting annotations, however, some researchers have been able to achieve detailed annotations \cite{Davis93,IBM00,Nagao02}. Unfortunately, producing detailed annotations incurs a high human cost.

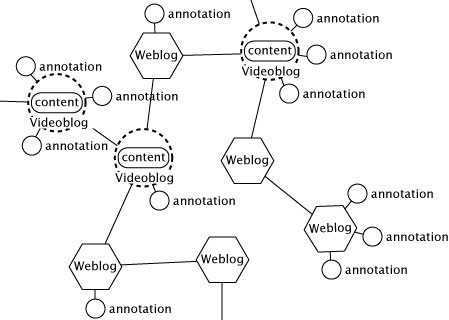

In contrast, we focus on the fact that many users watch the video contents, and we automatically extract annotations from natural intellectual activities such as bulletin-board communication and Weblog writing. Because a variety of annotations is acquired from many users, the annotation cost is low. Moreover, we can acquire comments and users' impressions, which are difficult to obtain through image and voice recognition. There are some related studies on electronic bulletin board-style video annotation, such as one of our previous studies \cite{Yamamoto04b} and the research of Brgeron \cite{Brgeron99}. These investigations, however, discuss only stand-alone video contents. By linking video contents and Weblogs through Trackbacks, our proposed system can form a novel Weblog network (Figure \ref{weblognet}). By using the semantic relation between an article at the reference origin and video contents at the reference head, we can extract many varieties of annotations. For example, the content of an article referring to a specific scene of the video contents can be treated indirectly as a comment annotation on the video. Moreover, two or more contents referred to from similar Weblog articles can be expected to share some similarity, just as the articles themselves do.
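The last observation can be illustrated with a simple co-reference measure. This is a deliberately simplified sketch under stated assumptions: it compares the sets of Weblog entries that sent Trackbacks to each video (Jaccard overlap), treating "referred to from similar articles" as "referred to from the same articles." The system itself does not specify a similarity measure, and the entry identifiers are hypothetical.

```python
def referrer_similarity(referrers_a, referrers_b):
    """Jaccard overlap of the sets of Weblog entries referring to two videos."""
    union = referrers_a | referrers_b
    if not union:
        return 0.0  # neither video has been referred to yet
    return len(referrers_a & referrers_b) / len(union)
```

For instance, two videos referred to from entries `{"e1", "e2", "e3"}` and `{"e2", "e3", "e4"}` share two of four distinct referrers, giving a similarity of 0.5.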

Using these annotations, we can support semantic retrieval and summarization \cite{Nagao02,Yamamoto04b} based on video annotations. In addition, analytical research on Weblog communities \cite{Kumar03} and trend analysis \cite{Glance04} become applicable to video contents by employing the features of Weblogs.

Figure 4: Weblog Network

5 Conclusion

The proposed mechanisms can link video contents, Weblogs, and users, and they have the following advantages. By linking video contents, users, and the Weblogs that users view regularly, we can activate communities and promote the circulation of contents. In addition, by extracting video annotations from this communication, we can use the system for annotation-based applications. In future work, we intend to open this system to the public and conduct experiments in a real environment.